Creativity Series Part III:

In Part 1, we explored the concept of creativity from various perspectives, together with closely related concepts. In Part 2, we focused primarily on creative potential and the personality traits associated with it. In this part, we take a high-level look at some approaches from the field of generative AI (GenAI) and relate them to human cognitive processes.

I never made one of my discoveries through the process of rational thinking. – Albert Einstein

Whether through reinforcement learning or supervised learning, we have been trying for years to get virtual agents to learn, act rationally, and make optimized decisions as efficiently and accurately as possible. Creativity, however, goes in the opposite direction: detached from the known, the obvious, and the rational, we aim to create and implement something that is both useful and novel. “Generative” means having the power or function to generate, produce, or reproduce something. This “something” can be original, new, and useful, and only then are our defined demands for creativity met. To judge whether a generative artificial agent possesses creative potential, behaves creatively, and delivers creative products, we need to examine more closely how it works.

The Generative Adversarial Network (GAN)

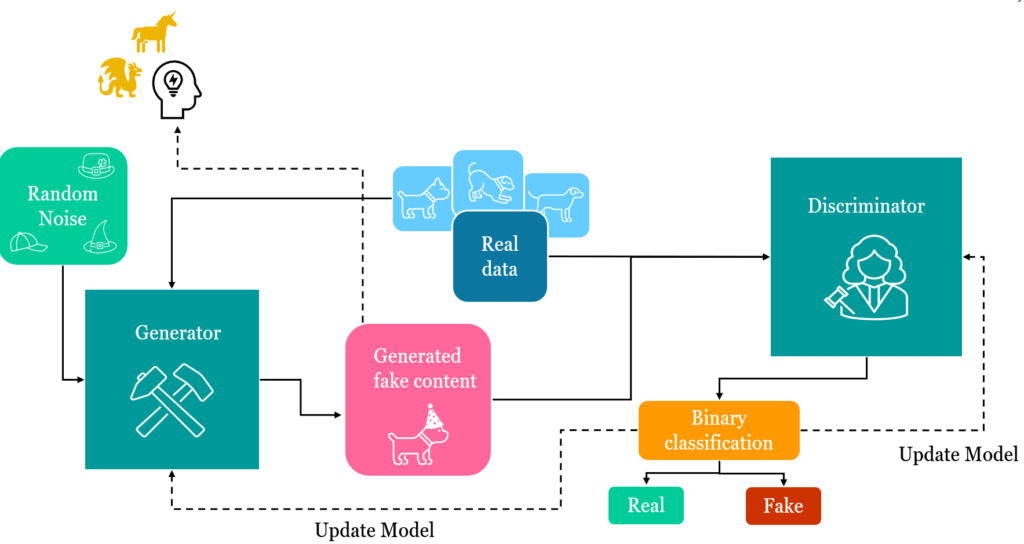

A GAN is an architecture in the field of deep learning.1 Using a GAN, for example, new images can be generated from an existing image database or music from a database of songs.

A GAN consists of two opposing components: the discriminator and the generator. In blue, you see real data, such as images of dogs, which we pass to the discriminator. It acts as a judge and decides, based on what it has learned so far, whether an image is real. Depending on whether its judgment was correct or incorrect, the model then adjusts, learns, and judges the next image more accurately. On the other side is the generator. It never sees the real images directly: it receives random noise as input and turns it into a candidate image, for example a dog wearing a hat. This image is also passed to the discriminator (judge) for evaluation. If the generator's image fails the authenticity check, the generator adjusts its model to mimic reality better the next time. The goal is to generate something, based on what the generator has learned about reality through the discriminator's feedback, that is close enough to reality for the discriminator to consider it real. The generator does not aim to create something new or useful. Without a specific instruction (prompt), it cannot know what is useful to us.
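To make the adversarial loop more concrete, here is a minimal sketch in plain Python. It is a one-dimensional toy, not an image model, and it follows the standard GAN formulation in which the generator starts from random noise. We assume that the “real data” are numbers drawn around 2.0, that the generator simply shifts noise by a learnable offset `b`, and that the discriminator is a logistic function with parameters `w` and `c`; all names and numbers are our own illustrative choices. Real GANs use deep neural networks and frameworks such as PyTorch or TensorFlow.

```python
import math
import random


def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(real_mean=2.0, steps=2000, batch=64, lr=0.1, seed=0):
    """Train a one-dimensional toy GAN with hand-derived gradients.

    Real data:      x ~ N(real_mean, 1)
    Generator:      G(z) = z + b, with random noise z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), its belief that x is real
    """
    rng = random.Random(seed)
    b = 0.0          # generator parameter: where it places its samples
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            g = -(1.0 - sigmoid(w * x + c))
            grad_w += g * x
            grad_c += g
        for x in fake:
            g = sigmoid(w * x + c)
            grad_w += g * x
            grad_c += g
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: minimize -log D(G(z)), i.e. try to fool the judge.
        grad_b = sum(-(1.0 - sigmoid(w * x + c)) * w for x in fake)
        b -= lr * grad_b / batch
    return b, w, c


b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f}, discriminator weight w = {w:.2f}")
```

If training behaves as intended, `b` ends up close to the real mean of 2.0 and `w` close to zero, so the discriminator outputs roughly 0.5 for everything: it can no longer tell real from generated numbers. Note that nothing in either loss rewards novelty; the generator is only rewarded for imitation.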

Would a GAN be able to create lizards and horses with rainbow-colored wings on its own, just because it thinks “this hasn’t been done before and everyone will surely find it cool”? If at all, dragons and unicorns would come about only by chance. Every generated image remains an answer to a prompt and tries to stick as closely as possible to the given context and task. A subjective observation from our team: to get results further removed from reality, it helped to give the GenAI tool additional cues pointing to another, non-real world, e.g., “It is a steampunk world” or “It is a fantasy world”.

In humans, the cognitive processes behind creativity, and behind divergent and convergent thinking, are not yet fully understood. Creative thinking is an iterative process of spontaneous generation and controlled elaboration, and it is closely related to the process of learning.

Key aspects are:

- Attention: the ability to filter, absorb, and manage incoming information. Visual information remains in our ultra-short-term memory (so-called sensory memory) for up to 0.5 seconds, and auditory information for up to two seconds, until it is decided whether we want to process it further.

- Short-term or working memory: Here, four to a maximum of nine “information chunks” can be simultaneously held and further processed.

- Long-term memory: Here, we can store information as a schema for anything from just a second to a lifetime.

When we encounter new, relevant information and decide to process it further, this always involves retrieving knowledge already stored in long-term memory. Every act of information processing changes existing schemas. But which information from long-term memory do we need in order to sensibly link the newly acquired information and store it later (so-called chunking)? Each of us has our own schemas and sees different connections. The assumption that ideas are learned by linking simple cognitive elements is called association.

If you think about things the way someone else does, then you will never understand it as well as if you think about it your own way. – Manjul Bhargava (Fields Medal in Mathematics, 2014)

Associative thinking is a mental process in which one idea, thought, or concept is linked to another idea or concept that often does not seem to be related. Depending on culture and experience, people have more or less similar schemas and often make similar associations. In one study, for example, people were asked to draw a species with feathers; most participants also gave the creature wings and a beak.2 We tend toward “predictable” creativity, in which we do not move far from known schemas. Searching outside the contextual solution space and creating something useful from what we find there is cognitively very demanding.

With certain techniques and ways of thinking, we can interrupt existing connections and ideas by imagining or thinking about two contradictory concepts at the same time. We will introduce some creativity techniques in the next part. Janusian thinking, for example, is the ability to synthesize something new and valuable from a pair of opposites. Albert Einstein imagined that a person falling from a roof is simultaneously at rest (relative to objects falling alongside them) and in motion, an insight that fed into his general theory of relativity. Thanks in part to vast amounts of text data, generative artificial agents are impressively good at detecting patterns and forming associations (or rather, statistical relationships between words and phrases). There are many different approaches3, and we will only look at some of them:

RNNs, LSTM, and Transformers4

Recurrent Neural Networks (RNNs) have been used, among other things, to translate large bodies of text into another language. The text is processed sequentially, sentence by sentence and word by word:

- The horse does not eat cucumber salad.

- The animal prefers carrots.

- But do not feed it chocolate.

In the second sentence, the horse no longer appears. Fortunately, the network might infer from patterns seen earlier that a horse is an animal. And who is “it” in the third sentence? To answer such questions later, a context must be maintained and passed on from processing step to processing step (as a hidden state).
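The idea of a hidden state that is passed from step to step can be sketched in a few lines of Python. This assumes a plain (Elman) RNN, made-up two-dimensional word vectors, and hand-picked weights (`EMBED`, `W_X`, `W_H` and `BIAS` are hypothetical); in a real network, vectors and weights are learned from data and are much larger.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, bias):
    """One step of a simple (Elman) RNN: h_t = tanh(W_x x_t + W_h h_(t-1) + bias)."""
    return [
        math.tanh(
            sum(W_x[i][j] * x[j] for j in range(len(x)))
            + sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
            + bias[i]
        )
        for i in range(len(bias))
    ]


def run_rnn(sequence, W_x, W_h, bias):
    """Process a sequence word by word, passing the hidden state along."""
    h = [0.0] * len(bias)  # no context before the first word
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, bias)
        states.append(h)
    return states


# Hypothetical 2-d word vectors and hand-picked weights (normally both are learned).
EMBED = {"horse": [1.0, 0.0], "animal": [0.8, 0.2], "it": [0.0, 1.0]}
W_X = [[0.5, -0.3], [0.2, 0.4]]
W_H = [[0.6, 0.1], [-0.2, 0.7]]
BIAS = [0.0, 0.0]

after_horse = run_rnn([EMBED["horse"], EMBED["it"]], W_X, W_H, BIAS)[-1]
after_animal = run_rnn([EMBED["animal"], EMBED["it"]], W_X, W_H, BIAS)[-1]
print(after_horse, after_animal)  # same word "it", different hidden states
```

The same word “it” produces different hidden states depending on whether “horse” or “animal” came before, which is the context the network has to carry forward.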

Our text body is very small. If we want to translate an entire book, the hidden state passed on at each step has to carry more and more information, and what it holds becomes unreliable over time: details from early sentences fade. Just as humans hold information in working memory or long-term memory for different lengths of time, depending on its relevance for what follows, Long Short-Term Memory (LSTM) was introduced as a type of RNN. Information that we only pass to the next step is held briefly (hidden state); globally relevant information is held longer (cell state). But even that is not optimal and can go wrong, particularly with long texts. Processing text sequentially while maintaining a context has disadvantages: even if LSTM lets us keep only what we consider important, the context keeps growing, and potentially necessary information is lost.
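The difference between the short-lived hidden state and the longer-lived cell state can be illustrated with a single LSTM cell. This is a sketch under simplifying assumptions: one unit instead of vectors, and hand-picked gate parameters instead of learned ones (the `cell_state_after` helper and its values are hypothetical).

```python
import math


def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def lstm_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM.

    params maps each gate name to (weight for x, weight for h_prev, bias).
    """
    def pre(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    g = math.tanh(pre("candidate"))  # the new information itself
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    c = f * c_prev + i * g           # cell state: long-lived memory
    h = o * math.tanh(c)             # hidden state: passed to the next step
    return h, c


def cell_state_after(filler_steps, forget_bias):
    """Feed one important signal (x = 1), then filler inputs (x = 0)."""
    params = {  # hand-picked, hypothetical values
        "forget": (0.0, 0.0, forget_bias),
        "input": (4.0, 0.0, -2.0),
        "candidate": (2.0, 0.0, 0.0),
        "output": (0.0, 0.0, 0.0),
    }
    h, c = 0.0, 0.0
    for x in [1.0] + [0.0] * filler_steps:
        h, c = lstm_step(x, h, c, params)
    return c


print(cell_state_after(20, forget_bias=4.0))   # roughly 0.59: signal kept
print(cell_state_after(20, forget_bias=-4.0))  # practically 0: signal forgotten
```

With a strongly positive forget-gate bias, the signal written in the first step survives 20 filler steps; with a negative bias, it is gone almost immediately. A trained LSTM learns when to keep and when to forget.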

As a solution to this problem, Google researchers presented a paper in 2017 titled “Attention Is All You Need”.5 Attention: the missing element in the process. As humans, we rarely read a text word by word. We maintain a context and direct our attention to what is currently relevant. Not every word has the same weight; some are merely “skimmed.” We also view information from multiple perspectives. While humans can only process a limited number of information chunks at a time because of the limits of working memory, transformers process all words of a sequence in parallel, and multi-head attention lets them look at the sequence from several perspectives at once. Yes, basically like a hydra. This gives the transformer the ability to encode multiple relationships and nuances for each word.6 According to Google Brain’s Ashish Vaswani, transformers got their name because “Attention Net” did not sound exciting enough.7 A generative artificial agent thus tries to recognize the most important information and extract as much contextual information as possible, so that it can give us its most realistic result using suitable associations.
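The central operation in the 2017 paper is scaled dot-product attention, softmax(QKᵀ/√d_k)·V, run in several heads in parallel. The following plain-Python sketch shows that computation with tiny, made-up vectors and projection matrices; real implementations learn the projections, add an output projection, masking, and batching, and run on tensor libraries.

```python
import math


def softmax(scores):
    """Turn scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one query at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how much attention q pays to each position
        all_weights.append(weights)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, all_weights


def project(vectors, W):
    """Multiply each row vector by the matrix W (a list of rows)."""
    return [[sum(v[i] * W[i][j] for i in range(len(v))) for j in range(len(W[0]))]
            for v in vectors]


def multi_head_self_attention(x, heads):
    """Each head has its own (W_q, W_k, W_v) and can focus on different relationships.

    Head outputs are concatenated per position; the final output projection used
    in the Transformer paper is omitted to keep the sketch short.
    """
    per_head = []
    for W_q, W_k, W_v in heads:
        out, _ = attention(project(x, W_q), project(x, W_k), project(x, W_v))
        per_head.append(out)
    return [sum((head[pos] for head in per_head), []) for pos in range(len(x))]


# Hypothetical 2-d vectors for "horse", "animal", "it" and two hand-picked heads.
x = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
heads = [(identity, identity, identity), (swap, identity, identity)]
print(multi_head_self_attention(x, heads))  # 3 positions, 2 heads x 2 dims each
```

Each row of weights produced by `attention` sums to 1, and every position looks at all other positions at once rather than one after another, which is what frees transformers from the strictly sequential processing of RNNs.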

Now that we have a basic understanding of how this works, let us return to the human process: new and potentially useful associations, or ideas, emerge when we retrieve existing information and link new and existing information chunks or schemas. To create original connections, information must first be found, not merely retrieved, and then be placed in a context where the connection becomes apparent.

The words “The horse does not eat cucumber salad.” were the first words spoken through a telephone.8 Philipp Reis was a teacher in Hessen (one of the 16 federal states of Germany) and built an illustrative model of the ear for his students. He succeeded, and the device could also transmit sounds of all kinds over long distances using galvanic current. To prove that spoken sentences actually arrived at the other end and had not simply been memorized, he chose bizarre, spontaneously spoken sentences for transmission. But the sentence above is not that bizarre. Reis aimed to end the sentence with a food that was semantically far from what horses actually eat, so that it would be original. Yet horses do, in principle, eat vegetables. One would have to try it out, but it cannot be ruled out that a moderately to severely hungry horse would, as an exception, resort to cucumber salad. According to pferdekumpel.de,9 horses do not die from eating cucumbers, and they can eat a certain amount of oil per day10 (we are no experts in any of these fields!). When generating text, a generative artificial agent proceeds similarly: it travels through the semantic space until it finds something appropriate and completes the sentence.11

To get original and potentially useful answers, we need to travel further through the semantic space. This increases the number of alternatives, whose usefulness must then be evaluated. The goal of a generative artificial agent is not to give us the most original answer but to stay close to reality and answer in a human-like way. Even in Reis’s case, the journey through semantic space was not far.
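“Travelling through semantic space” can be pictured with cosine similarity between word vectors. The sketch below is deliberately simplified: the three-dimensional vectors and the `candidates_within` helper are invented for illustration, and real language models predict next-word probabilities rather than filtering a word list by a similarity threshold.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Hypothetical 3-d "embeddings", invented for illustration. The axes loosely stand for
# (is food, typical horse feed, whimsical); real embeddings have hundreds of
# learned dimensions without such readable meanings.
EMBEDDINGS = {
    "oats": [0.9, 0.9, 0.0],
    "carrots": [0.9, 0.8, 0.1],
    "cucumber salad": [0.9, 0.2, 0.3],
    "chocolate": [0.8, 0.0, 0.3],
    "stardust": [0.1, 0.0, 1.0],
}


def candidates_within(context, min_similarity):
    """Words at least min_similarity close to the context, closest first.

    Lowering min_similarity means travelling further through the space:
    more (and stranger) alternatives whose usefulness must then be judged.
    """
    scored = sorted(((cosine_similarity(context, vec), word)
                     for word, vec in EMBEDDINGS.items()), reverse=True)
    return [word for sim, word in scored if sim >= min_similarity]


horse_food = [1.0, 1.0, 0.0]  # hypothetical context vector for "what a horse eats"
print(candidates_within(horse_food, 0.9))  # ['oats', 'carrots']
print(candidates_within(horse_food, 0.5))  # adds 'cucumber salad' and 'chocolate'
```

Lowering the threshold widens the radius around the context, which brings in more alternatives, including less typical ones such as “cucumber salad”, whose usefulness then has to be judged.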

When we asked ChatGPT-3 in German to complete the sentence “The horse eats no…”, we received “cucumber salad” as the answer, together with Philipp Reis’s biography. When we asked for alternatives, ChatGPT-3 gave us “oats”, “corn”, and “wheat”: it ignored the negation and simply provided plausible words. The response was somewhat more appropriate in our English attempt, where ChatGPT-3 answered “…hay that has not been thoroughly inspected for mold and contaminants”. A very rational answer: not original, but potentially useful. When we asked ChatGPT-3 in English for more “creative” answers, we got “stardust”, “moonlight”, and “rainbows.” This suggests that it treated the request to behave creatively as an invitation to travel further through the semantic space, or rather to generate more unexpected, “far-reaching” associations. It can therefore be guided to look further afield within its learned embeddings and draw on a broader, sometimes more indirect range of patterns.
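In our experiment, we steered ChatGPT only through the prompt. A separate, related mechanism in many language-model interfaces is the sampling temperature, which we did not test here. The sketch below shows the usual temperature-scaled softmax over hypothetical scores for a few completions (`WORDS`, `LOGITS` and `sample_completion` are invented for illustration): the higher the temperature, the more probability shifts to unlikely words such as “stardust”.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert scores to probabilities; higher temperature flattens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for completing "The horse eats no ..." (invented numbers).
WORDS = ["oats", "hay", "cucumber salad", "stardust", "rainbows"]
LOGITS = [3.0, 2.5, 1.0, -1.0, -1.5]


def sample_completion(temperature, rng):
    """Draw one word according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(LOGITS, temperature)
    return rng.choices(WORDS, weights=probs, k=1)[0]


for t in (0.5, 1.0, 2.0):
    p = softmax_with_temperature(LOGITS, t)[WORDS.index("stardust")]
    print(f"temperature {t}: P(stardust) = {p:.3f}")

rng = random.Random(42)
print([sample_completion(1.5, rng) for _ in range(5)])
```

At low temperature, sampling almost always returns “oats” or “hay”; at higher temperature, “stardust” and “rainbows” appear more often. Temperature only reshapes the probabilities the model already assigns; it adds no judgement of usefulness.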

People whom we perceive as more creative often have more flexible semantic networks, with many connections (edges) and short paths between individual concepts (nodes).12 You can test how good you are at associative thinking with the Remote Associates Test. You are given three words (fish, rush, mine) and have to find the word that connects all three (solution: gold => goldfish, gold rush, gold mine). A free site with examples to try out can be found online.13 We also tested ChatGPT-3 in English, and it reached its limits. To try it out yourself, we recommend the following prompt: “With which word can we combine these three words in a meaningful way? palm / shoe / house.”
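A semantic network can be modelled as a graph with concepts as nodes and associations as edges. In that picture, a Remote Associates item asks for a node linked to all three cue words, and “short paths” can be measured with breadth-first search. The network below is a tiny, hand-written, hypothetical example (`EDGES` and the helper names are ours); research on semantic networks typically estimates such graphs from empirical association data.

```python
from collections import defaultdict, deque

# Hypothetical toy semantic network: undirected edges between associated words.
EDGES = [
    ("gold", "fish"), ("gold", "rush"), ("gold", "mine"), ("gold", "silver"),
    ("fish", "sea"), ("sea", "salt"), ("rush", "hour"), ("mine", "coal"),
    ("silver", "spoon"),
]


def build_graph(edges):
    """Adjacency sets: each word (node) maps to the words it is linked to (edges)."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def remote_associates(graph, cues):
    """Candidate RAT solutions: words directly linked to every cue."""
    common = set(graph.get(cues[0], set()))
    for cue in cues[1:]:
        common &= graph.get(cue, set())
    return common - set(cues)


def path_length(graph, start, goal):
    """Number of edges on the shortest path (breadth-first search), or None."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbour in graph.get(node, set()):
            if neighbour == goal:
                return dist + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None


sparse = build_graph(EDGES)
print(remote_associates(sparse, ["fish", "rush", "mine"]))  # {'gold'}
print(path_length(sparse, "salt", "coal"))  # 5 hops: salt-sea-fish-gold-mine-coal

# One extra association creates a shortcut, as in a more flexible network.
flexible = build_graph(EDGES + [("salt", "mine")])
print(path_length(flexible, "salt", "coal"))  # 2 hops: salt-mine-coal
```

A single extra association shortens the path from “salt” to “coal” from five hops to two, a toy version of the short paths attributed to more flexible semantic networks.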

Associations are relevant for divergent thinking and creativity.14 However, association is not the only way to generate creative ideas. Other cognitive processes include:

- Generalization: Extracting common features from specific examples to form a general rule or concept (e.g. all birds have feathers => and yes, we do know there are birds without feathers).

- Analogies: Mapping the relationships between elements from two different domains to highlight similarities (the brain is to humans what the CPU is to computers).15

- Abstraction: Disregarding specific details to concentrate on the core principles of a concept.
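Two of the processes listed above can be caricatured in code: generalization as keeping the features that all examples share, and analogy as matching the relation that links two elements in one domain to a relation in another. This is a hypothetical toy with invented feature sets and relation triples (`BIRDS`, `RELATIONS` and both functions are ours); it is far simpler than research systems such as the Structure-Mapping Engine.

```python
# Hypothetical toy knowledge for two of the processes listed above.

# Generalization: feature sets of a few specific examples.
BIRDS = {
    "sparrow": {"feathers", "beak", "wings", "lays eggs", "flies"},
    "penguin": {"feathers", "beak", "wings", "lays eggs", "swims"},
    "ostrich": {"feathers", "beak", "wings", "lays eggs", "runs"},
}


def generalize(examples):
    """Keep only the features that every example shares."""
    feature_sets = list(examples.values())
    if not feature_sets:
        return set()
    return set.intersection(*feature_sets)


# Analogy: relations as (part, relation, whole) triples from two domains.
RELATIONS = [
    ("brain", "controls", "human"),
    ("heart", "pumps blood through", "human"),
    ("CPU", "controls", "computer"),
    ("fan", "cools", "computer"),
]


def complete_analogy(part, whole, target_whole):
    """Solve 'part is to whole what ? is to target_whole' by matching relations.

    A drastic simplification of relational mapping, not the Structure-Mapping Engine.
    """
    relations = {rel for subj, rel, obj in RELATIONS if subj == part and obj == whole}
    return [subj for subj, rel, obj in RELATIONS if obj == target_whole and rel in relations]


print(generalize(BIRDS))                               # the shared features
print(complete_analogy("brain", "human", "computer"))  # ['CPU']
```

The analogy function only succeeds because the relation “controls” was written down explicitly for both domains. Finding such shared structure without being told is the hard part, for humans and machines alike.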

An example of abstract thinking: chameleons, octopuses, and Arctic foxes camouflage themselves with their skin or fur color to avoid being seen by enemies or prey. We can extract this concept as abstract knowledge and apply it elsewhere. We find it again, for example, in military camouflage, where it serves to avoid detection by the enemy.

Generalization, abstraction, and analogies are currently the subject of intensive research. Researchers are testing potential approaches, such as the Structure-Mapping Engine (SME). In the field of analogies, there have already been some breakthroughs under the term “domain transfer.” However, it seems to us that we are still far from technologies with human-like abilities in abstract thinking, even though ChatGPT-3 is very confident in its own abilities. Just ask it. If you want to learn more about this, we recommend this paper by Melanie Mitchell from the Santa Fe Institute as an introduction.16

- Generative Adversarial Networks – Hot Topic in Machine Learning. KDnuggets.

- Smith, S. M., & Ward, T. B. (2012). Cognition and the creation of ideas. In K. J. Holyoak & R. G. Morrison (Eds.), The Oxford handbook of thinking and reasoning (pp. 456–474). Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199734689.013.0023

- https://www.marktechpost.com/2023/03/21/a-history-of-generative-ai-from-gan-to-gpt-4/

- Cakra. From Recurrent Neural Network (RNN) to Attention explained intuitively. Medium.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention is All you Need. In I. Guyon, U. von Luxburg, S. Bengio, H. M. Wallach, R. Fergus, S. V. N. Vishwanathan, & R. Garnett (Eds.), Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4–9, 2017, Long Beach, CA, USA (pp. 5998–6008).

- Giacaglia, G. How Transformers Work. Towards Data Science.

- https://blogs.nvidia.com/blog/what-is-a-transformer-model/

- https://www.friedrich-verlag.de/friedrich-plus/grundschule/deutsch/sprechen-zuhoeren/das-pferd-frisst-keinen-gurkensalat-6260

- https://pferdekumpel.de/ernaehrung/duerfen-pferde-gurken-essen-ratgeber/

- https://www.natural-horse-care.com/pferdekrankheiten/welches-oel-pferd-fuettern.html

- Beaty, R. E., Zeitlen, D. C., Baker, B. S., & Kenett, Y. N. (2021). Forward flow and creative thought: Assessing associative cognition and its role in divergent thinking. Thinking Skills and Creativity, 41, 100859. https://doi.org/10.1016/j.tsc.2021.100859

- Frith, E., Kane, M. J., Welhaf, M. S., Christensen, A. P., Silvia, P. J., & Beaty, R. E. (2021). Keeping Creativity under Control: Contributions of Attention Control and Fluid Intelligence to Divergent Thinking. Creativity Research Journal, 33(2), 138–157. https://doi.org/10.1080/10400419.2020.1855906

- https://www.remote-associates-test.com/

- Benedek, M., Könen, T., & Neubauer, A. C. (2012). Associative abilities underlying creativity. Psychology of Aesthetics, Creativity, and the Arts, 6(3), 273–281.

- Gentner, D., & Hoyos, C. (2017). Analogy and Abstraction. Topics in Cognitive Science, 9, 672–693. https://doi.org/10.1111/tops.12278

- Mitchell, M. (2021). Abstraction and analogy-making in artificial intelligence. Annals of the New York Academy of Sciences, 1505, 79–101. https://doi.org/10.1111/nyas.14619